I have lived in the same city for seven years. It is not a large one: a small, lovely southern German town. From time to time, when I have the time, I just love to roam around without a real goal.

Cycling tours

I have two small children, almost as lovely as the German city I live in, when they are not busy demolishing our place. I take them outside, into the forest. Maybe there is a sheep or two to watch.

One of these idyllic places is about three kilometers away from my place; from spring to autumn, I am there with the children once a month. My oldest is six years old: this makes a grand total of over seventy visits. This place is called Listhof.

One of the problems with the Listhof is the following: you ride along a valley with a gentle slope for maybe ten minutes, and then you have to take a left turn and climb a very steep hill. It is not more than fifty meters of altitude difference, but with a trailer it can be a challenge, in particular if the trailer's content starts complaining about the lack of speed. But I love them: month after month, the same route. Until yesterday. At the point where you take the left turn, you can also choose to go right; if you know the geography of the place, it is immediately clear that this road goes nowhere.

Or does it? Curious, I took this right turn on one of these exploration tours. After only minutes of a flat ride, unexpectedly: the Listhof.

Geography is simple, other stuff is not

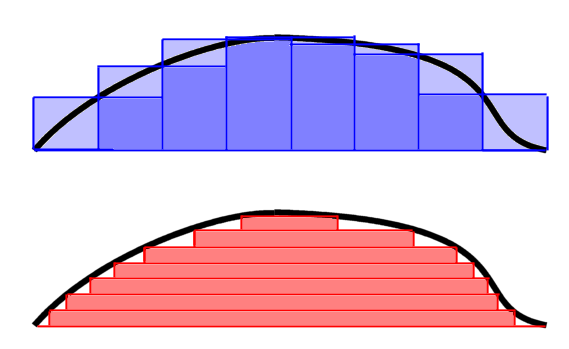

When you think of it, the road topology of a small city is a simple business. After seven years, you should have learned it. But surprising facts hide just beyond the grasp of mental laziness. If this is true for simple problems, we can only guess what other treasures are hidden in plain sight in more difficult situations. Here is an example from chess programs, a field which I like and contribute to. There is a part of most of these programs in which you look at all the possible captures of an enemy piece by one of yours. To save time, it is logical to check first whether you can grab the most valuable enemy piece with your least valuable piece. If you're lucky, you discover that you can safely capture it and don't need to inspect the rest.

This ordering logic is called MVV/LVA (Most Valuable Victim, Least Valuable Aggressor). It has been used for decades in chess programming. Then, three years ago, in Stockfish, the chess engine to which I contribute, we tested it. And, alas, we discovered that MVV ordering alone is enough. That was weird. Then we started statistically testing different secondary ordering criteria, searching for improvements; this kind of trying out random ideas is much more difficult than you might think. Typically, after five or six ideas, you dry up. We were lucky, and I found an improvement after a few attempts. Indeed, replacing the LVA logic with a penalty based on how deep in the enemy lines you are capturing does the job.
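To make the two orderings concrete, here is a minimal Python sketch. It is not Stockfish code: the piece values are the usual textbook ones, and the `Capture` class, the `depth` field (0 for our own back rank, 7 for the enemy's) and the penalty weight of 0.1 are all assumptions made up for illustration.

```python
from dataclasses import dataclass

# Textbook piece values in pawn units (an assumption, not real engine values).
PIECE_VALUE = {"P": 1, "N": 3, "B": 3, "R": 5, "Q": 9, "K": 100}


@dataclass(frozen=True)
class Capture:
    aggressor: str  # type of the piece making the capture
    victim: str     # type of the piece being captured
    depth: int      # hypothetical: 0 = our own back rank, 7 = the enemy's back rank


def mvv_lva_key(c: Capture):
    # Most valuable victim first; ties broken by the least valuable aggressor.
    return (-PIECE_VALUE[c.victim], PIECE_VALUE[c.aggressor])


def mvv_depth_key(c: Capture, penalty: float = 0.1):
    # Most valuable victim first, with a small penalty (hypothetical weight)
    # for capturing deep in the enemy lines. The aggressor is ignored.
    return -(PIECE_VALUE[c.victim] - penalty * c.depth)


captures = [
    Capture("Q", "P", 6),
    Capture("P", "R", 6),
    Capture("N", "R", 2),
    Capture("R", "Q", 7),
]

# sorted() puts the smallest key first, so the best capture comes first.
by_mvv_lva = sorted(captures, key=mvv_lva_key)
by_mvv_depth = sorted(captures, key=mvv_depth_key)

for name, ordering in (("MVV/LVA", by_mvv_lva), ("MVV + depth penalty", by_mvv_depth)):
    print(name, [f"{c.aggressor}x{c.victim}" for c in ordering])
```

In this toy position both orderings try the queen capture first. They only disagree on the two rook captures: MVV/LVA prefers the pawn as aggressor, while the depth penalty prefers the knight's capture because it happens closer to home.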

Question the assumptions

How many assumptions are you carrying with you all of the time? Here are some questions as inspiration, along with what I discovered over time.

Are you sure you wouldn't like another job, even if you are doing well at the moment?

Some months ago I moved on, and I realized that working with people is at least as good as working with code.

Do you know exactly what your partner likes and what they do not?

I always thought that my wife wanted help in finding solutions when she had a big problem. A few years ago, after being married for more than five years, I discovered that no, she does not. She typically just wants a kiss and a cup of tea.

How the hell do you know you don't like Harry Potter?

I refused to read it till 2009. Then I became addicted.

I can't live without a car!

Two years ago, we had to spend 2000 € on repairs for the car. I was cheap and sold it. We decided to wait some time before buying a new one. It turned out that we can live without a car.

Happy exploring!